Download the source

curl http://pkgconfig.freedesktop.org/releases/pkg-config-0.29.2.tar.gz -o pkg-config-0.29.2.tar.gz

Compile

tar -xf pkg-config-0.29.2.tar.gz

cd pkg-config-0.29.2

./configure --with-internal-glib

make

xuenhua’s site

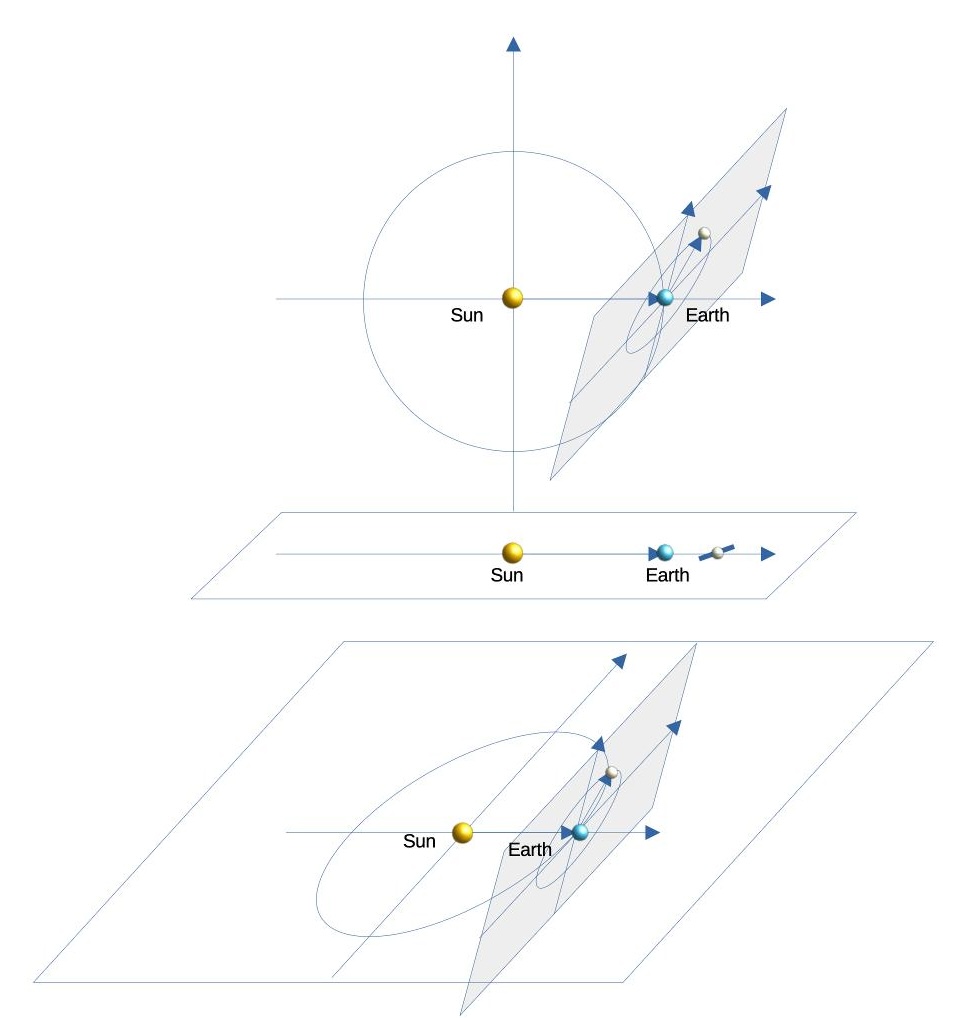

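Earth's orbital period: 365.2564 days

Moon's orbital period: 27.32166 days

Angle between the Moon's orbital plane and the Earth's orbital plane

Both the Earth and the Moon follow elliptical orbits, but since both eccentricities are small, the orbits can be approximated as circles.

Predicting a lunar or solar eclipse amounts to computing the angle between the vectors … and …. If the angle is close to 0, the Sun, Earth, and Moon lie on one line and a lunar eclipse occurs. Similarly, if the angle is close to 180 degrees, a solar eclipse occurs.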
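The angle test can be sketched in a few lines of Python under the circular-orbit approximation. Several things here are assumptions rather than part of the original text: the two vectors are taken to be Sun→Earth and Earth→Moon (the choice for which an angle of 0 puts the Earth between the Sun and the Moon), the inclination default of about 5.145 degrees is a commonly cited value for the Moon's orbit, the line of nodes is held fixed along the x axis, and the function name and phase parameters are purely illustrative.

```python
import math

EARTH_PERIOD_DAYS = 365.2564   # from the text
MOON_PERIOD_DAYS = 27.32166    # from the text
INCLINATION_DEG = 5.145        # assumption: commonly cited value, not given in the text


def alignment_angle_deg(t_days, earth_phase=0.0, moon_phase=0.0,
                        inclination_deg=INCLINATION_DEG):
    """Angle in degrees between the Sun->Earth and Earth->Moon directions.

    Both orbits are treated as circles. The phases (radians) are hypothetical
    starting angles; the Moon's orbit is tilted about the x axis, i.e. the
    line of nodes is assumed fixed along x.
    """
    theta_e = 2 * math.pi * t_days / EARTH_PERIOD_DAYS + earth_phase
    theta_m = 2 * math.pi * t_days / MOON_PERIOD_DAYS + moon_phase
    inc = math.radians(inclination_deg)

    # Unit vector from the Sun to the Earth, in the Earth's orbital plane.
    sun_to_earth = (math.cos(theta_e), math.sin(theta_e), 0.0)
    # Unit vector from the Earth to the Moon, in the tilted lunar plane.
    earth_to_moon = (math.cos(theta_m),
                     math.sin(theta_m) * math.cos(inc),
                     math.sin(theta_m) * math.sin(inc))

    dot = sum(a * b for a, b in zip(sun_to_earth, earth_to_moon))
    dot = max(-1.0, min(1.0, dot))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(dot))
```

Scanning `t_days` over a range and flagging times where the result comes close to 0 or 180 would give candidate eclipse dates. How close counts as "close" is left open here, since the text does not give a threshold.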

Li Si (Qin)

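Your servant has heard that the officials are deliberating the expulsion of guest ministers, and I humbly consider this a mistake. In former times Duke Mu sought men of talent: in the west he took You Yu from the Rong, in the east he obtained Baili Xi from Wan, welcomed Jian Shu from Song, and drew Pi Bao and Gongsun Zhi from Jin. These five men were not born in Qin, yet Duke Mu employed them, annexed twenty states, and became hegemon over the Western Rong. Duke Xiao adopted the laws of Shang Yang and reformed customs and habits; the people thereby prospered, the state grew rich and strong, the common people gladly served, and the feudal lords drew close and submitted. He captured the armies of Chu and Wei and took a thousand li of territory, and the state remains well governed and strong to this day. King Hui followed the strategy of Zhang Yi: he seized the lands of Sanchuan, annexed Ba and Shu in the west, took Shang Commandery in the north and Hanzhong in the south, encompassed the Nine Yi, controlled Yan and Ying, held the stronghold of Chenggao in the east, and carved off fertile lands. He thus broke up the Vertical Alliance of the six states and made them face west to serve Qin, an achievement whose benefits extend to the present. King Zhao obtained Fan Ju, dismissed the Marquis of Rang, expelled Lord Huayang, strengthened the ruling house, blocked private factions, nibbled away at the feudal lords, and enabled Qin to achieve the imperial enterprise. These four rulers all relied on the achievements of guest ministers. Seen in this light, how have the guests failed Qin? Had these four rulers rejected the guests and not admitted them, kept men of talent at a distance and not employed them, the state would have had no substance of wealth and profit, and Qin no name for strength and greatness. (缪 is also written 穆.)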

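Now Your Majesty acquires the jade of the Kun Mountains and possesses the treasures of Sui and He; you hang the Bright Moon pearl, wear the Tai'e sword, ride the horse Xianli, raise banners of kingfisher-feathered phoenixes, and set up drums of sacred alligator hide. Of these many treasures Qin produces not one, and yet Your Majesty delights in them. Why? If a thing had to be produced in Qin before it was acceptable, then the night-shining jade disc would not adorn the court, vessels of rhinoceros horn and ivory would not be your playthings, the women of Zheng and Wei would not fill the rear palace, fine steeds such as the jueti would not fill the outer stables, the gold and tin of Jiangnan would not be put to use, and the cinnabar and azurite of western Shu would not serve as pigments. If whatever adorns the rear palace, fills the ranks of attendants, pleases the heart, and delights the ear and eye had to come from Qin, then hairpins of Wan pearls, earrings set with small pearls, robes of fine Dong'e silk, and embroidered ornaments would not be presented before you, and the women of Zhao, refined in fashion, lovely and graceful, would not stand at your side. Beating on jars and tapping earthen pots, plucking the zheng and slapping the thigh while singing "woo-woo" to please the ear: that is the true music of Qin. The airs of Zheng and Wei, the Sangjian, the Zhao, the Yu, the Wu, and the Xiang are the music of other states. Now you set aside the beating of jars and pots and turn to Zheng and Wei; you dismiss the plucked zheng and take up the Zhao and the Yu. Why is this? Simply because they please you in the moment and suit the eye and ear. Yet in choosing men it is not so: without asking whether they are capable, without judging right or wrong, whoever is not of Qin is sent away and whoever is a guest is expelled. What is valued, then, is beauty, music, pearls, and jade, and what is slighted is people. This is not the way to bestride all within the seas and command the feudal lords.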

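Your servant has heard that where the land is broad, grain is plentiful; where the state is large, the people are many; where the army is strong, the soldiers are brave. Thus Mount Tai does not refuse any clod of soil, and so it attains its greatness; the rivers and seas do not reject the small streams, and so they attain their depth; a king does not turn away the multitudes, and so he makes his virtue manifest. Therefore land is not divided by the four quarters and people are not distinguished by their state of origin; the four seasons are full and fair, and the spirits send down blessings. This is why the Five Emperors and Three Kings had no rival. Now you abandon the common people so that they aid enemy states, and turn away guests so that they build the fortunes of the other lords, causing the talented men of the world to draw back and not dare face west, binding their feet and not entering Qin. This is what is called "lending weapons to bandits and supplying provisions to robbers." (太山 is also written 泰山; 择 is also written 释.)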

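Many things not produced in Qin are worth treasuring; many men not born in Qin are willing to be loyal. Now to expel the guests and so aid enemy states, to diminish your own people and so benefit your foes, leaving yourself empty within while sowing resentment among the feudal lords without: to hope that the state will be free of danger is impossible.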

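We often receive loan offers from banks. When the interest is high we usually refuse outright, but some offers look cheap, or even seem to let us "profit from the spread", and we accept them without calculating carefully. A careful calculation shows that we have actually walked into a trap.

A real case:

The loan is repaid in 12 installments. For a loan of 10,000, the monthly payment is 848.333 (equal installments of principal and interest), for a total repayment of 10,180. An annualized rate of 1.8%? One-year deposit rates have now entered the "1.x%" era, but some small banks and wealth-management products can still offer around 2%. A rough calculation at an average rate of 2%: depositing the 10,000 earns 200 in interest, the loan costs 180 in interest, so you come out 20 ahead. Clever reader, is this calculation right? Can you really pocket the interest spread?

At first glance it looks like a gain of 20, but in reality it is a loss of 73. Let me walk through the calculation in detail.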
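One way to arrive at a figure close to the quoted loss is sketched below in Python. It rests on assumptions that the text does not state: the borrowed 10,000 is deposited at 2% per year, every installment is paid out of that deposit, and interest accrues as simple monthly interest on whatever balance remains. The second function estimates the rate the loan actually implies, by bisection on the standard annuity formula. Both function names are illustrative.

```python
def net_gain_from_depositing_loan(principal=10000.0, payment=848.333,
                                  months=12, deposit_rate=0.02):
    """Deposit interest earned minus loan interest paid.

    Assumes simple monthly interest on the remaining balance, with each
    installment withdrawn from the deposit at the end of the month.
    """
    balance = principal
    earned = 0.0
    for _ in range(months):
        earned += balance * deposit_rate / 12  # interest on what is still deposited
        balance -= payment                     # this month's installment leaves the account
    loan_interest = payment * months - principal
    return earned - loan_interest


def implied_annual_rate(principal=10000.0, payment=848.333, months=12):
    """Nominal annual rate (12 x monthly rate) implied by the installments."""
    lo, hi = 1e-9, 1.0  # bounds on the monthly rate
    for _ in range(200):
        mid = (lo + hi) / 2
        # Present value of the installments at monthly rate `mid`.
        present_value = payment * (1 - (1 + mid) ** -months) / mid
        if present_value > principal:
            lo = mid  # rate too low: installments are worth more than the loan
        else:
            hi = mid
    return 12 * (lo + hi) / 2
```

Under these assumptions the first function returns a loss of a little over 73, because the deposit shrinks every month and earns roughly 107 rather than 200. The second function puts the implied annual rate at about 3.3%, nearly double the advertised 1.8%.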

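Videos of a chubby orange cat have recently gone viral. They follow trending news topics, with the cat playing many roles: the orange cat performing surgery, the Shaolin orange cat, the orange cat working a sewing machine, and so on. The character is cute and the plots track current events, which has won over viewers. How are these videos made? The following explains how to make a chubby orange cat video.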

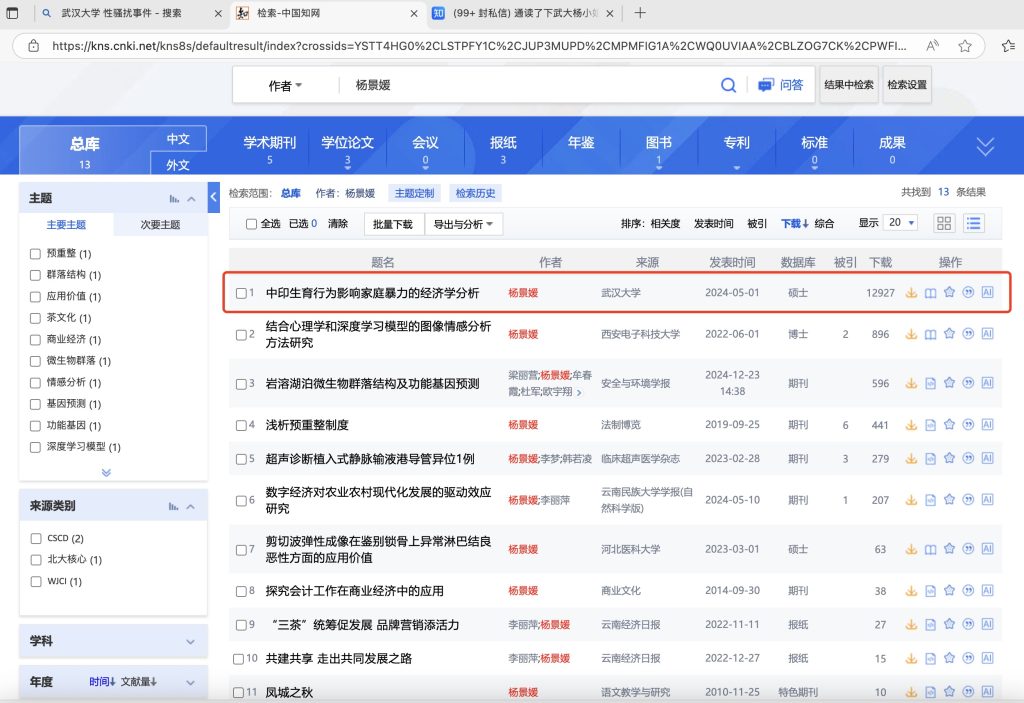

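A PDF of a Wuhan University "outstanding" master's thesis. Its download count on CNKI has reached 12,927 and is still climbing; my bold guess is that this is the highest download count since CNKI was founded. CNKI charges for downloads, so I compiled this PDF from a Bilibili video to share with everyone. [Download]

Disclaimer: for study and discussion only; please do not use it for any other purpose. If it infringes intellectual property rights, please contact me and it will be removed.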

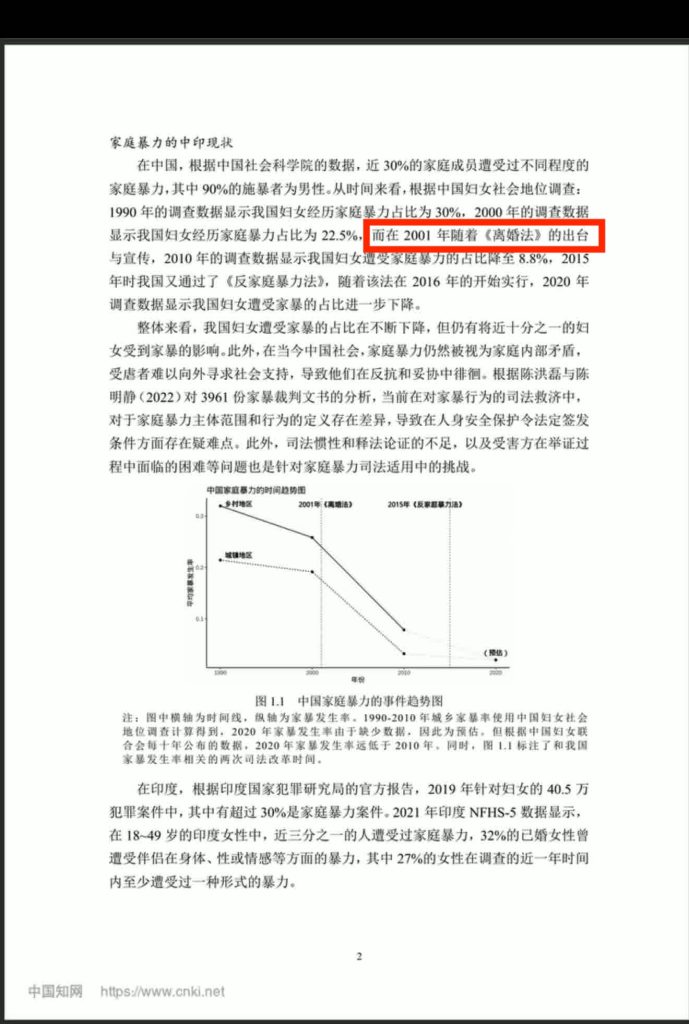

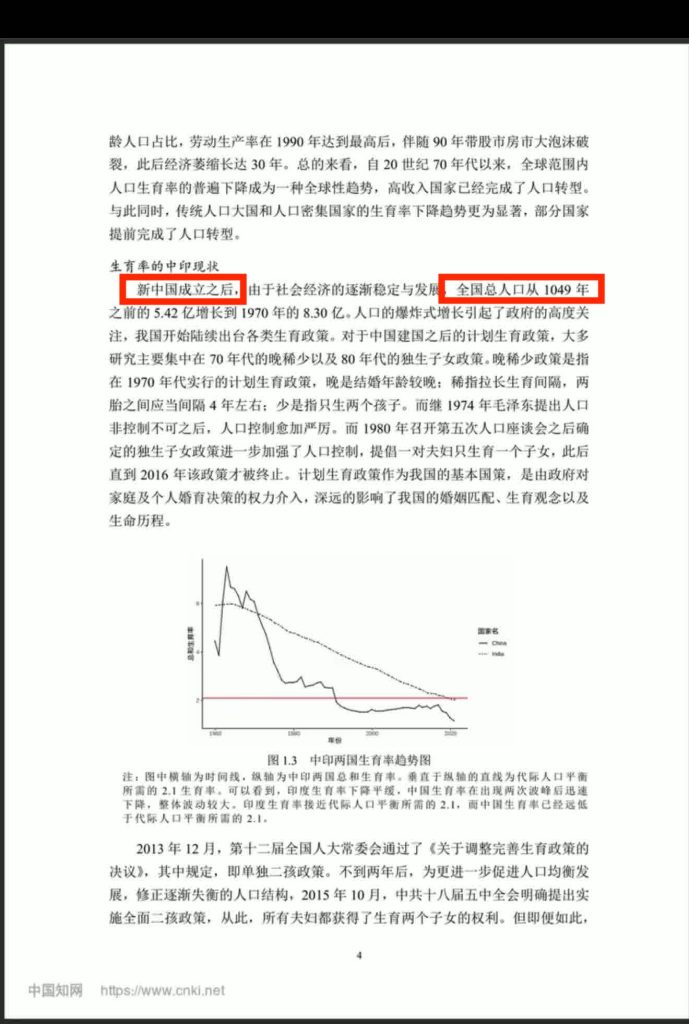

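Page 2 of the main text invents a "Divorce Law", and page 4 gives the founding year of the People's Republic of China as 1049. How did an article like this get indexed by CNKI!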