0. Approach

- Create the logo graphic in Inkscape

- Animate it in Blender

- Edit the video in iMovie or a similar tool

1. Animation design

The petals gradually rotate and converge, accompanied by a light, fresh chime.
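The "rotate and converge" motion can be written out as keyframe math. This is a minimal sketch under stated assumptions, not the author's Blender setup. The petal count, radii, extra spin, ease-out curve, and the names `petalPose` and `sampleKeyframes` are all hypothetical. Each petal starts half a turn away from its resting angle at a larger radius, then eases into its final angle and radius.

```javascript
// Hypothetical parameters: 6 petals, radius shrinks from 3 to 1,
// and each petal sheds an extra half turn while converging.
const PETAL_COUNT = 6;
const START_RADIUS = 3;
const END_RADIUS = 1;
const EXTRA_SPIN = Math.PI; // radians of extra rotation at t = 0

// Ease-out cubic: moves quickly at first, then settles gently.
function easeOutCubic(t) {
  return 1 - Math.pow(1 - t, 3);
}

// Pose of petal i at normalized time t (clamped to [0, 1]).
function petalPose(i, t) {
  const clamped = Math.min(Math.max(t, 0), 1);
  const p = easeOutCubic(clamped);
  const restAngle = (2 * Math.PI * i) / PETAL_COUNT; // evenly spaced final angles
  const angle = restAngle + (1 - p) * EXTRA_SPIN;
  const radius = START_RADIUS + (END_RADIUS - START_RADIUS) * p;
  return { angle, radius, x: radius * Math.cos(angle), y: radius * Math.sin(angle) };
}

// Sample every frame of the clip: one array of petal poses per frame.
function sampleKeyframes(fps, seconds) {
  const frames = Math.round(fps * seconds);
  const keys = [];
  for (let f = 0; f <= frames; f++) {
    const t = f / frames;
    keys.push(Array.from({ length: PETAL_COUNT }, (_, i) => petalPose(i, t)));
  }
  return keys;
}

// Usage: a 2-second clip at 24 fps gives 49 sampled frames (0..48).
const clip = sampleKeyframes(24, 2);
console.log(clip.length); // 49
```

In Blender the same effect would normally come from keyframing each petal's rotation and location and letting F-curve interpolation supply the easing; the sketch only makes that motion explicit.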

2. Drawing the graphic in Inkscape

We design a graphic like this:

Draw it as vector artwork in Inkscape:


xuenhua’s site


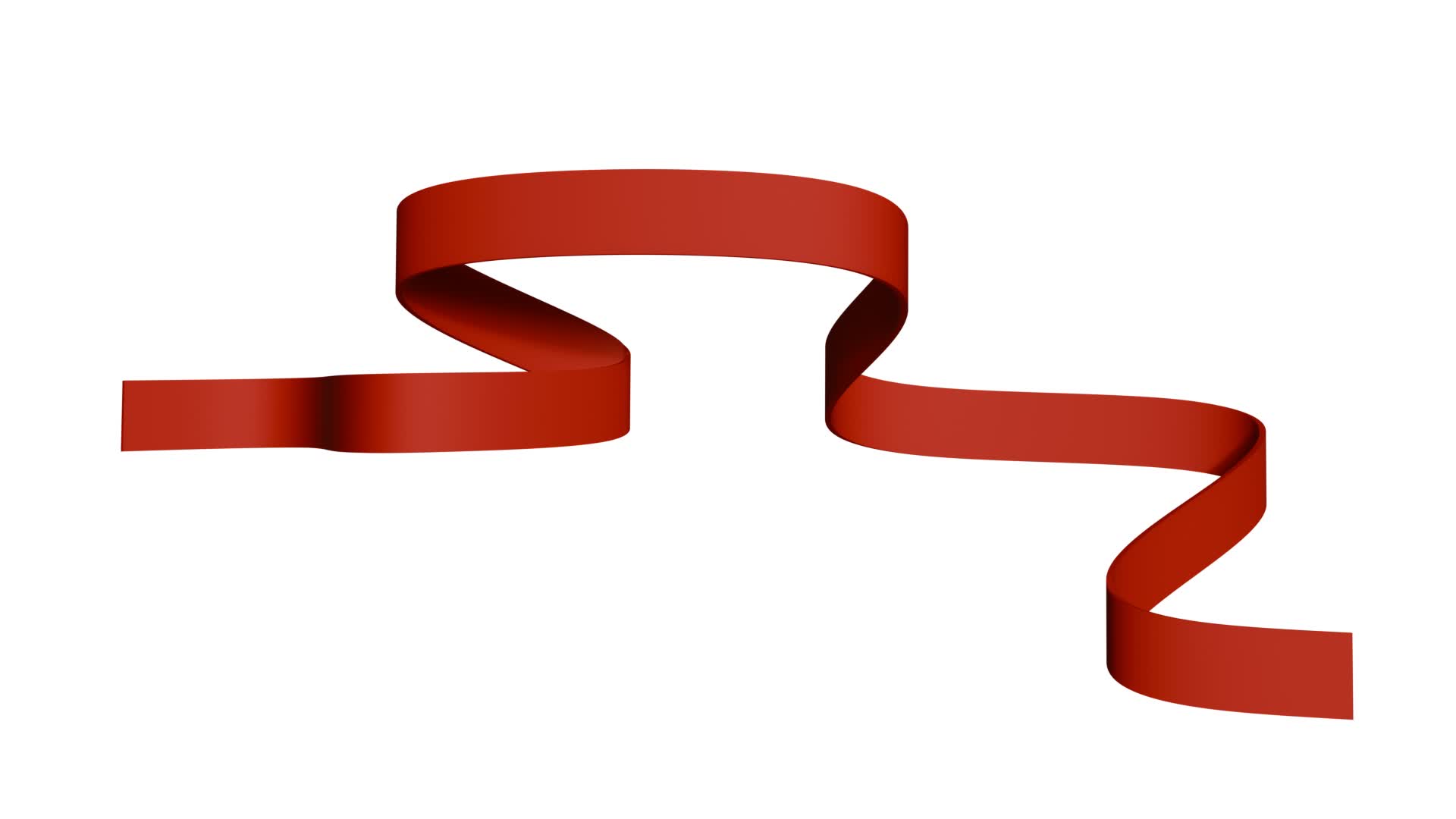

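Posters often call for ribbon (streamer) artwork. A ribbon can be made in several ways: you can draw it in GIMP, Inkscape, Photoshop, or Illustrator, but that takes some artistic skill to get the shading, perspective, gradients, and so on right, which makes it tedious. Since the goal is a 3D look, Blender is the better-suited tool. How can we produce an effect like the one below in Blender?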

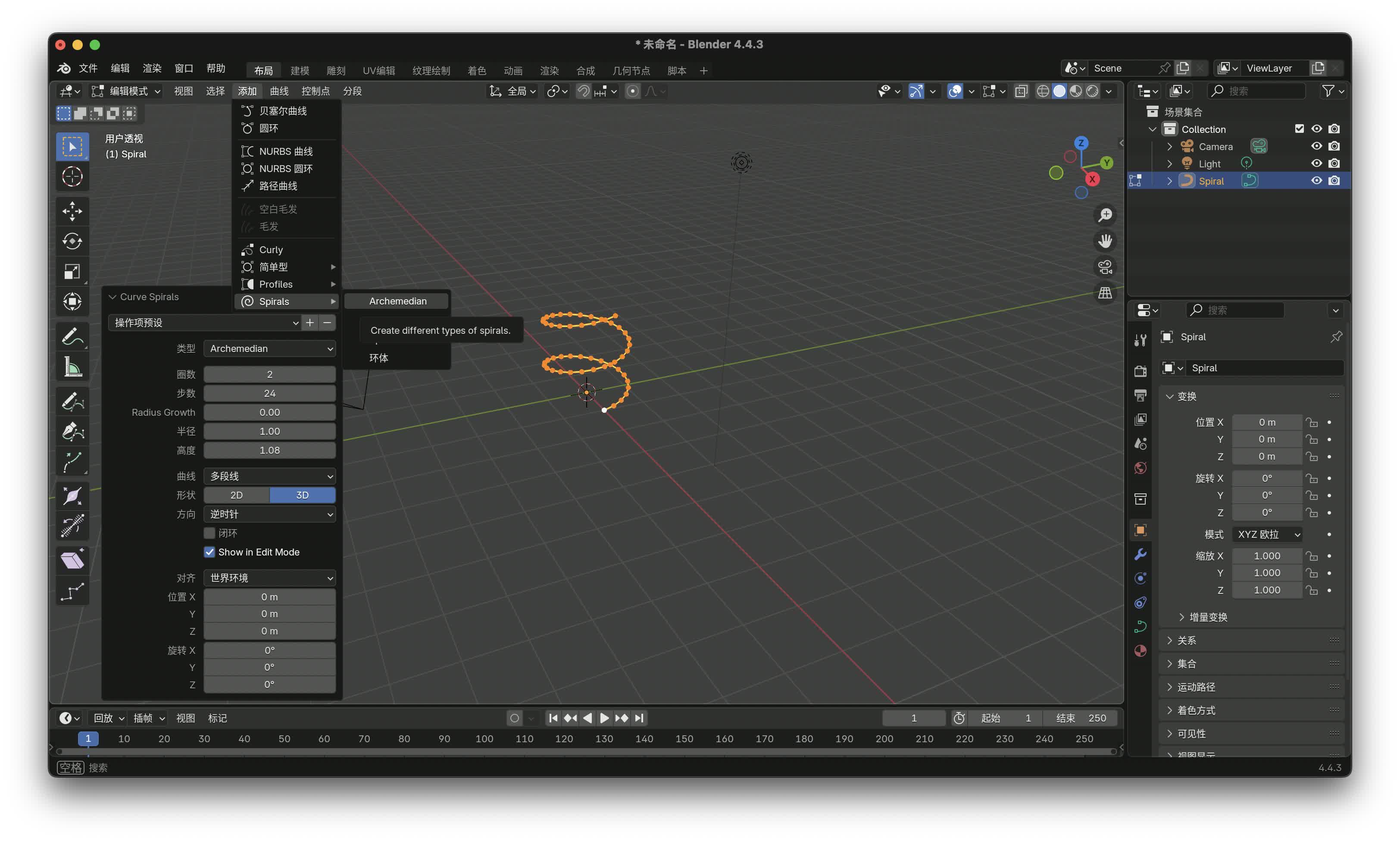

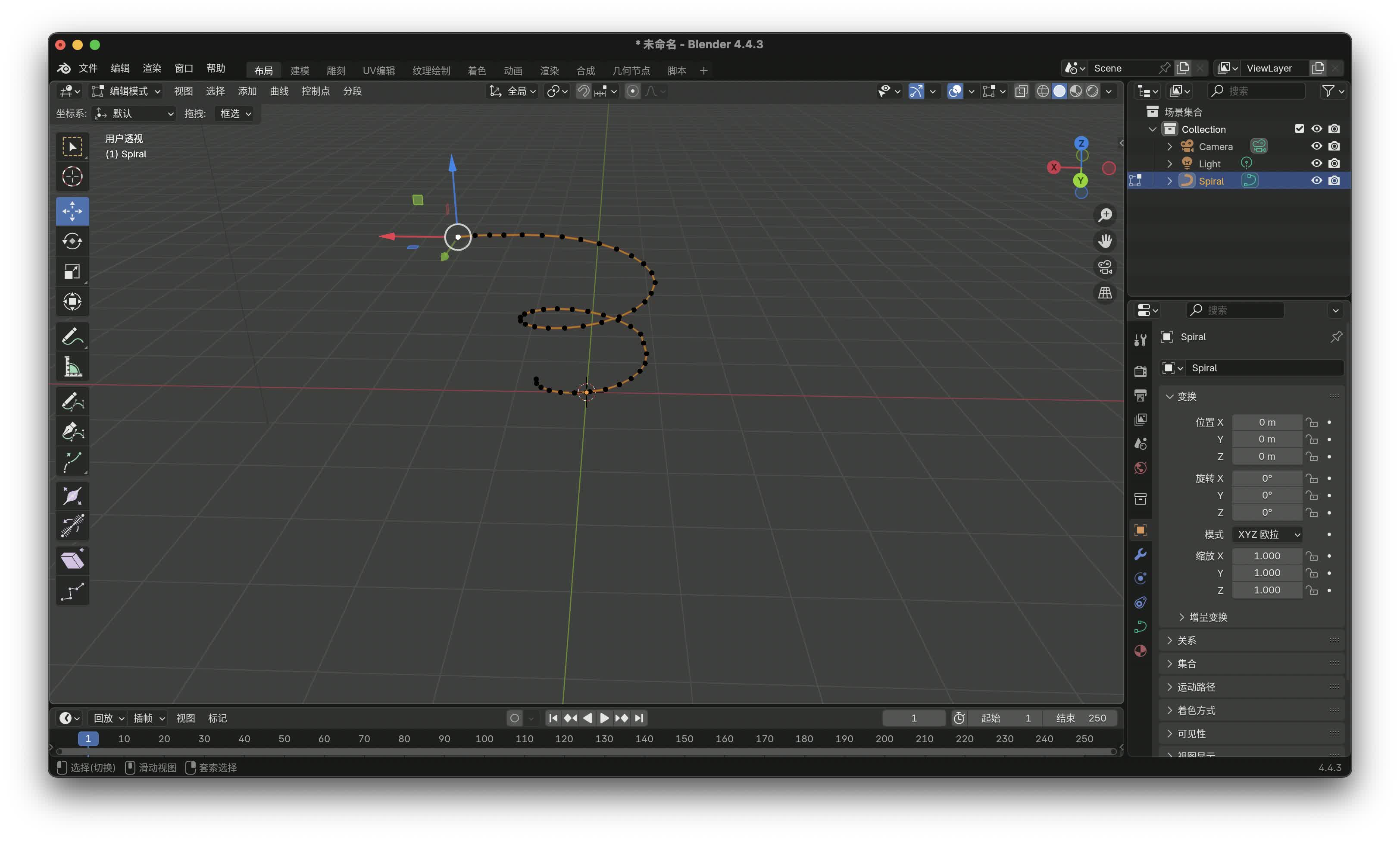

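Blender offers several ways to draw curves: the built-in Bézier and NURBS curves, or the Extra Curve Object and Extra Mesh Object add-ons, which make it easy to create spirals and similar curves. You then fine-tune the curve's shape until it gives the effect you want. Here we use the spiral from the Extra Curve Object add-on. A spiral is too regular on its own, so select individual points and adjust them by hand.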
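The "regular spiral, then nudge a few points" idea can be illustrated outside Blender. This is a minimal sketch in plain JavaScript, not Blender's API: in Blender the add-on generates the spiral and the tweaking is done by hand in Edit Mode. The names `helixPoints` and `jitterPoints` and all parameter values are hypothetical; `mulberry32` is a small, well-known seeded PRNG used so that the same seed always gives the same tweak.

```javascript
// Evenly sampled 3D helix (hypothetical parameters, not the add-on's settings).
function helixPoints({ turns, pointsPerTurn, radius, heightPerTurn }) {
  const total = turns * pointsPerTurn;
  const pts = [];
  for (let k = 0; k <= total; k++) {
    const theta = (2 * Math.PI * k) / pointsPerTurn;
    pts.push({
      x: radius * Math.cos(theta),
      y: radius * Math.sin(theta),
      z: (heightPerTurn * k) / pointsPerTurn, // rises steadily with each turn
    });
  }
  return pts;
}

// mulberry32: tiny deterministic PRNG returning floats in [0, 1).
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Offset only the chosen points by up to +/- amount per axis;
// all other points are returned unchanged (same objects).
function jitterPoints(points, indices, amount, seed) {
  const rand = mulberry32(seed);
  const chosen = new Set(indices);
  return points.map((p, i) =>
    chosen.has(i)
      ? {
          x: p.x + (rand() * 2 - 1) * amount,
          y: p.y + (rand() * 2 - 1) * amount,
          z: p.z + (rand() * 2 - 1) * amount,
        }
      : p
  );
}

// Usage: a 3-turn spiral with three hand-picked points loosened up.
const spiral = helixPoints({ turns: 3, pointsPerTurn: 12, radius: 1, heightPerTurn: 0.4 });
const ribbonPath = jitterPoints(spiral, [5, 14, 23], 0.15, 7);
console.log(ribbonPath.length); // 37
```

Touching only a few points keeps the overall spiral intact while breaking its mechanical regularity, which mirrors selecting individual control points in Blender rather than distorting the whole curve.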

To display an ICP filing number (备案许可证编号) in the footer of a site built with the PyData Sphinx Theme, add a paragraph to the theme's version component:

```shell
vi pydata_sphinx_theme/theme/pydata_sphinx_theme/components/theme-version.html
```

```html
<p class="theme-version">
  备案许可证编号:<a href="http://beian.miit.gov.cn/">京ICP备000000号</a>
</p>
<p class="theme-version">
  {% trans theme_version=theme_version|e %}Built with the <a href="https://pydata-sphinx-theme.readthedocs.io/en/stable/index.html">PyData Sphinx Theme</a> {{ theme_version }}.{% endtrans %}
</p>
```

For a Docusaurus site, the same line can be appended to the footer `copyright` string in `docusaurus.config.js`:

```diff
diff --git a/docusaurus.config.js b/docusaurus.config.js
index 31c32b2..dcfd863 100644
--- a/docusaurus.config.js
+++ b/docusaurus.config.js
@@ -179,7 +179,7 @@ const config = {
             ],
           },
         ],
-        copyright: `Copyright © ${new Date().getFullYear()} XXXXX. Built with Docusaurus.`,
+        copyright: `Copyright © ${new Date().getFullYear()} XXXXX. Built with Docusaurus.<br />备案许可证编号:<a target="_blank" rel="noopener noreferrer" href="http://beian.miit.gov.cn/">京ICP备00000000号</a>`,
       },
       prism: {
         theme: prismThemes.github,
```

With sphinx-tabs, MyST admonitions placed inside tabs are not displayed correctly, for example:

```markdown
::::{tabs}
:::{tab} attention
:::{attention}
attention
:::
:::
:::{tab} caution
:::{caution}
caution
:::
:::
::::
```

The following patch to `selectTab` in `sphinx_tabs/static/tabs.js` also removes the `hidden` attribute from the panel's parent node before showing the panel itself:

```diff
diff --git a/sphinx_tabs/static/tabs.js b/sphinx_tabs/static/tabs.js
index 48dc303..163ea57 100644
--- a/sphinx_tabs/static/tabs.js
+++ b/sphinx_tabs/static/tabs.js
@@ -89,6 +89,9 @@ function selectTab(tab) {
   tab.setAttribute("aria-selected", true);
 
   // Show the associated panel
+  document
+    .getElementById(tab.getAttribute("aria-controls"))
+    .parentNode.removeAttribute("hidden");
   document
     .getElementById(tab.getAttribute("aria-controls"))
     .removeAttribute("hidden");
```
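To see what the patch changes without building the docs, the sketch below runs the patched `selectTab` body against a hand-made stub of the DOM. Only the body of `selectTab` comes from the patch. `StubElement`, `makeSelectTab`, the element ids, and the assumption that the panel sits inside a wrapper that is itself `hidden` (one plausible reason the admonitions stay invisible) are all hypothetical. The stub implements only the few DOM methods the function calls.

```javascript
// Minimal stand-in for a DOM element: just the methods selectTab uses.
class StubElement {
  constructor(id, parentNode = null) {
    this.id = id;
    this.parentNode = parentNode;
    this.attributes = new Map();
  }
  setAttribute(name, value) {
    this.attributes.set(name, String(value)); // the DOM stores attribute values as strings
  }
  getAttribute(name) {
    return this.attributes.has(name) ? this.attributes.get(name) : null;
  }
  removeAttribute(name) {
    this.attributes.delete(name);
  }
  hasAttribute(name) {
    return this.attributes.has(name);
  }
}

// The patched selectTab, taking `document` as a parameter so it runs outside a browser.
function makeSelectTab(document) {
  return function selectTab(tab) {
    tab.setAttribute("aria-selected", true);

    // Show the associated panel: first its parent (added by the patch), then the panel
    document
      .getElementById(tab.getAttribute("aria-controls"))
      .parentNode.removeAttribute("hidden");
    document
      .getElementById(tab.getAttribute("aria-controls"))
      .removeAttribute("hidden");
  };
}

// Hypothetical layout: a hidden wrapper around a hidden tab panel.
const wrapper = new StubElement("wrapper");
wrapper.setAttribute("hidden", "");
const panel = new StubElement("panel-1", wrapper);
panel.setAttribute("hidden", "");
const tab = new StubElement("tab-1");
tab.setAttribute("aria-controls", "panel-1");

const stubDocument = {
  getElementById: (id) => [wrapper, panel, tab].find((el) => el.id === id) || null,
};

const selectTab = makeSelectTab(stubDocument);
selectTab(tab);
console.log(panel.hasAttribute("hidden"), wrapper.hasAttribute("hidden")); // false false
```

With only the original `.removeAttribute("hidden")` call, `panel` would lose its `hidden` attribute while `wrapper` kept it, so under this assumed layout nothing would appear on the page.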