Experiment

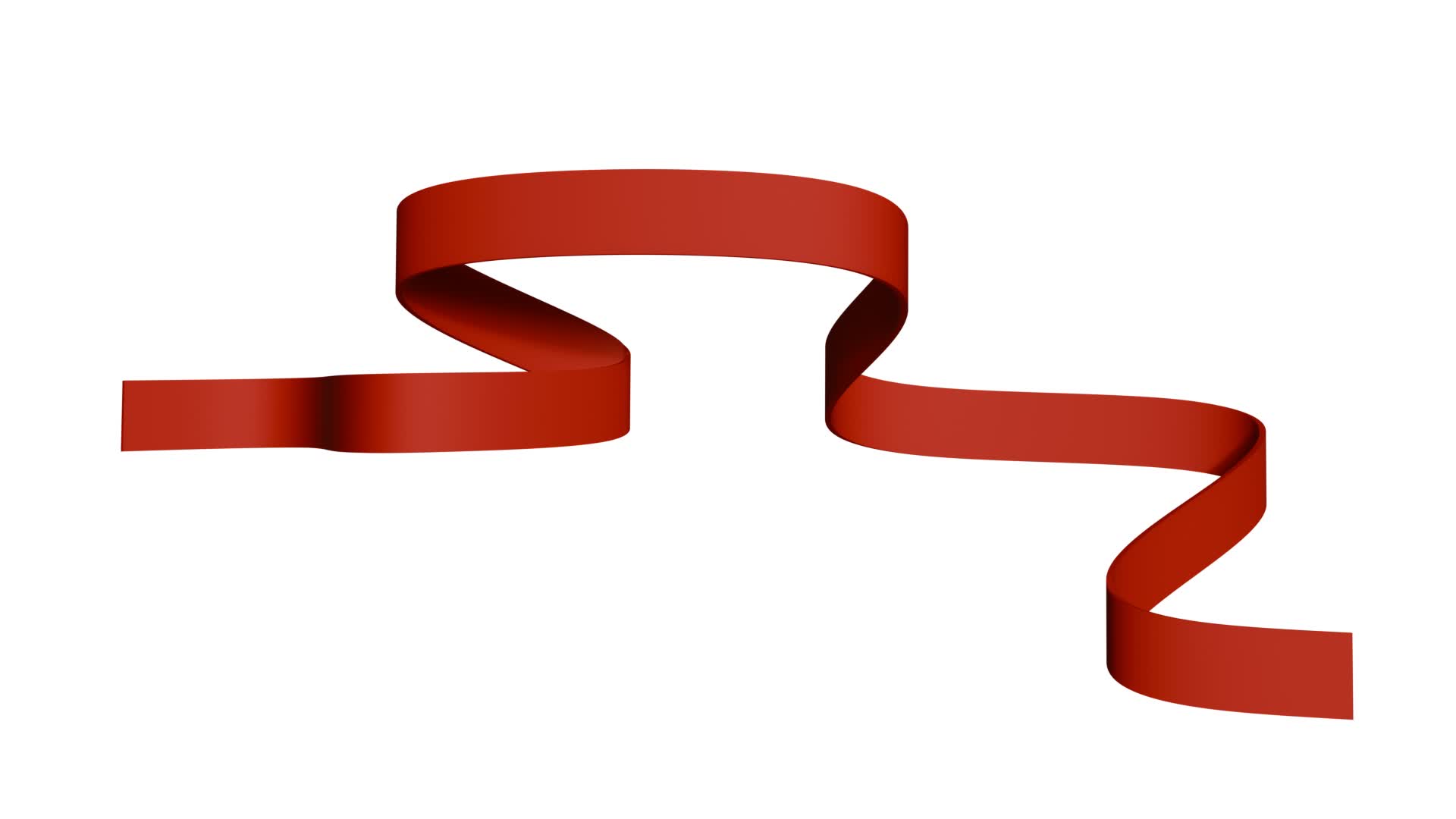

People abroad have done a similar experiment.

The result is real; the video is not a synthesized fake.

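Question

Why does the object at the bottom barely move? Let's start from the experimental result and explain it with the laws of physics.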

1. Explaining it from the experimental result

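Take A, B, and the spring together as the system under study. At the moment the hand lets go, the only external force left on the system is its total weight.

By Newton's second law, that total weight must equal the sum of each part's mass times its acceleration.

The experiment shows $a_B = 0$. This is allowed as long as the rest of the system accelerates downward faster than $g$ on average (for A, $a_A > g$), so B staying at rest is consistent with the law.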
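The argument above can be written out as a short worked equation. This is a sketch under assumptions that are not in the original: $m_A$, $m_B$ and $m_s$ denote the masses of A, B and the spring, $a_s$ is the acceleration of the spring's center of mass, downward is taken as positive, and air resistance is neglected.

$$
(m_A + m_B + m_s)\,g = m_A a_A + m_B a_B + m_s a_s
$$

Setting $a_B = 0$, as observed, gives

$$
\frac{m_A a_A + m_s a_s}{m_A + m_s} = \frac{m_A + m_B + m_s}{m_A + m_s}\,g > g
$$

so the mass-weighted average acceleration of A and the spring has to exceed $g$ for B to stay at rest. Nothing in Newton's second law forbids that.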

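2. Internal force analysis

Still, this feels counterintuitive. At the instant the hand lets go, the spring's deformation cannot vanish instantly (a Xiaomi car braking to an instant stop being the exception). So B still has zero net force, while the net force on A is its own weight plus the spring's pull, which equals the weight of B and the spring. A's acceleration is therefore greater than the gravitational acceleration.

An instant later, A has dropped a little and the deformation at the top of the spring has changed: the tension there is now less than the weight of B plus the spring. The section of spring just below then contracts slightly, and the change is passed on downward, section by section. Only when it reaches B does B start to move.

So the question becomes: how fast does the deformation travel down the spring? If it is very fast, B stays at rest only briefly and the effect is hard to see; you would need slower playback, or a longer spring, to observe it. What does this speed depend on? In the experiment filmed abroad there are no weights at the ends, only the spring, so a reasonable guess is that it depends on the spring's stiffness and mass.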
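The section-by-section picture above can be tried numerically. The following is a minimal sketch, not the author's method: it models the spring as a chain of beads joined by ideal linear springs, ignores contact between coils and air resistance, and uses made-up parameters (`total_mass`, `total_k`, `rest_len`) chosen only to resemble a soft toy spring. The end weights A and B are left out, as in the spring-only experiment.

```python
import numpy as np

def drop_chain(n=50, total_mass=0.2, total_k=0.8, rest_len=0.05,
               g=9.8, dt=1e-4, t_end=0.25):
    """Release a hanging bead-spring chain.

    Returns (top, bottom): how far the top and bottom beads have
    moved downward, in metres, after t_end seconds.
    All parameter values are illustrative assumptions.
    """
    m = total_mass / n        # mass of each bead
    k = total_k * (n - 1)     # stiffness of each of the n-1 segments in series

    # Hanging equilibrium: each segment is stretched by the weight
    # of the beads below it.
    beads_below = np.arange(n - 1, 0, -1)            # n-1, n-2, ..., 1
    gaps = rest_len + beads_below * m * g / k
    y0 = np.concatenate(([0.0], np.cumsum(gaps)))    # positions, downward positive

    y = y0.copy()
    v = np.zeros(n)
    for _ in range(int(round(t_end / dt))):
        tension = k * (np.diff(y) - rest_len)        # tension in each segment
        force = np.full(n, m * g)                    # gravity on every bead
        force[:-1] += tension                        # segment pulls its upper bead down
        force[1:] -= tension                         # and its lower bead up
        v += force / m * dt                          # semi-implicit Euler step
        y += v * dt
    return y[0] - y0[0], y[-1] - y0[-1]

top, bottom = drop_chain()
print(f"top moved {top:.3f} m, bottom moved {bottom:.2e} m")
```

With these assumed values the run stops at roughly half the time the disturbance needs to cross the chain, so the top bead should have fallen a long way while the bottom bead has essentially not moved. Increasing `total_k` or decreasing `total_mass` shortens that window, which matches the guess that stiffness and mass set the speed.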

Longitudinal waves

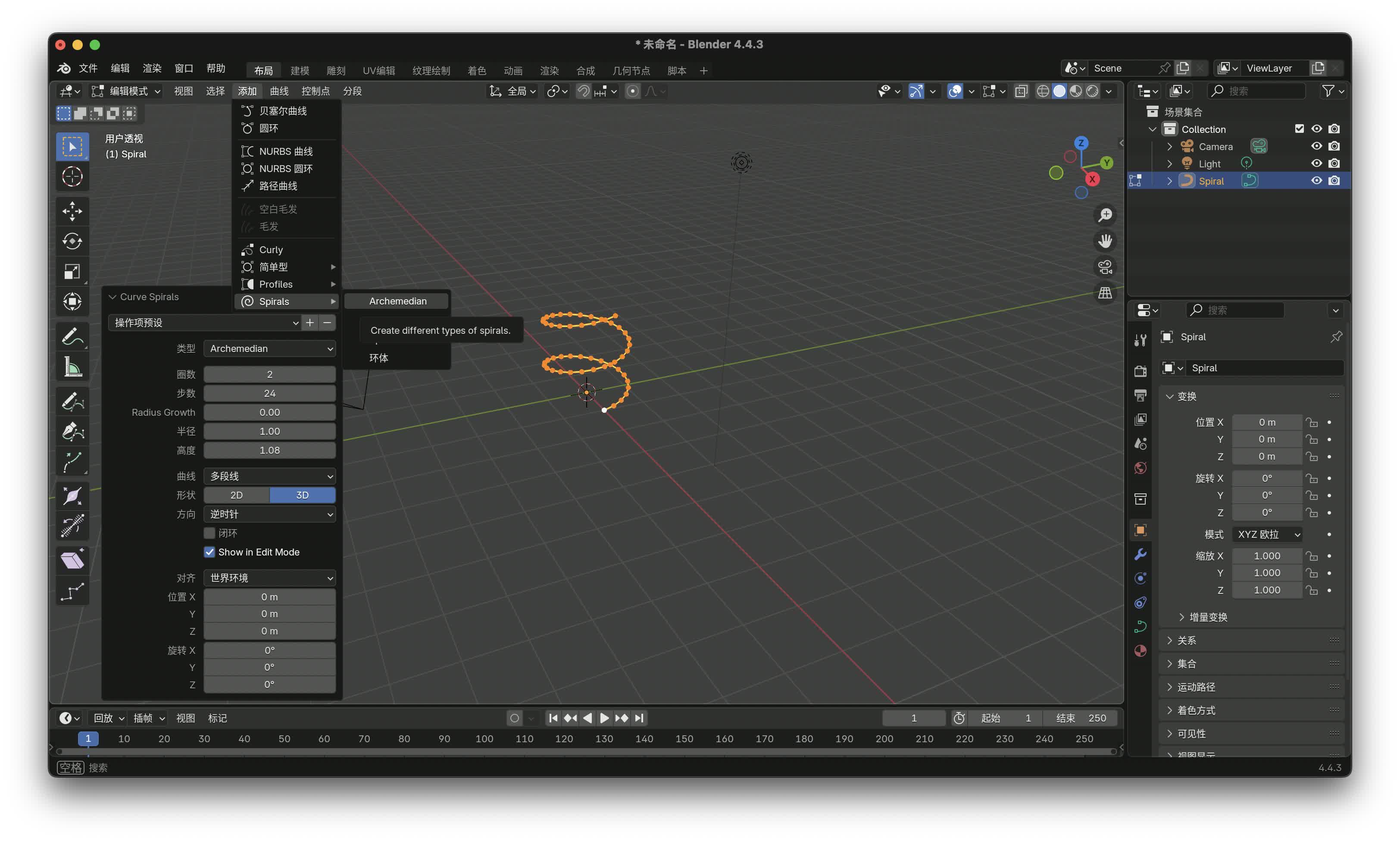

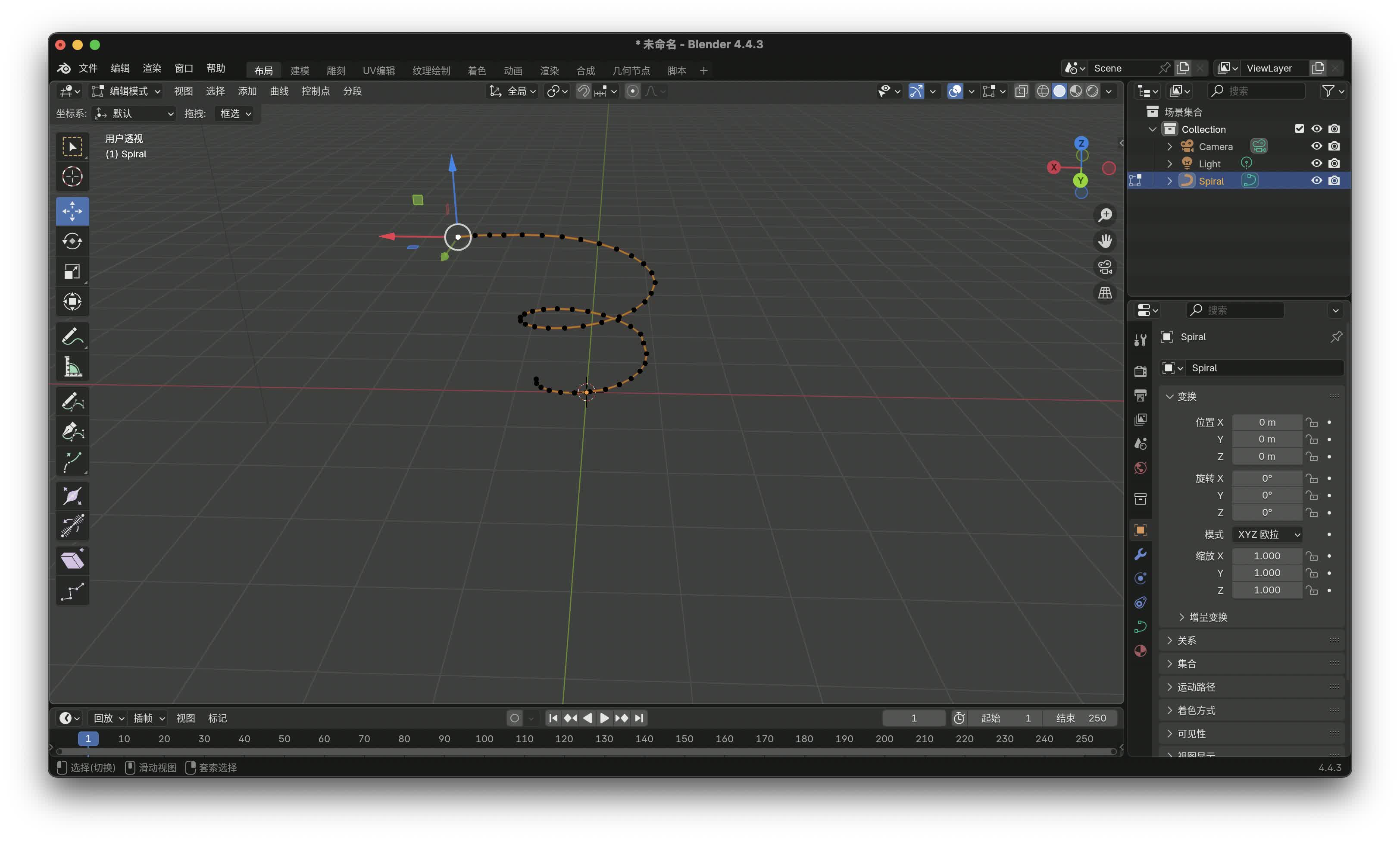

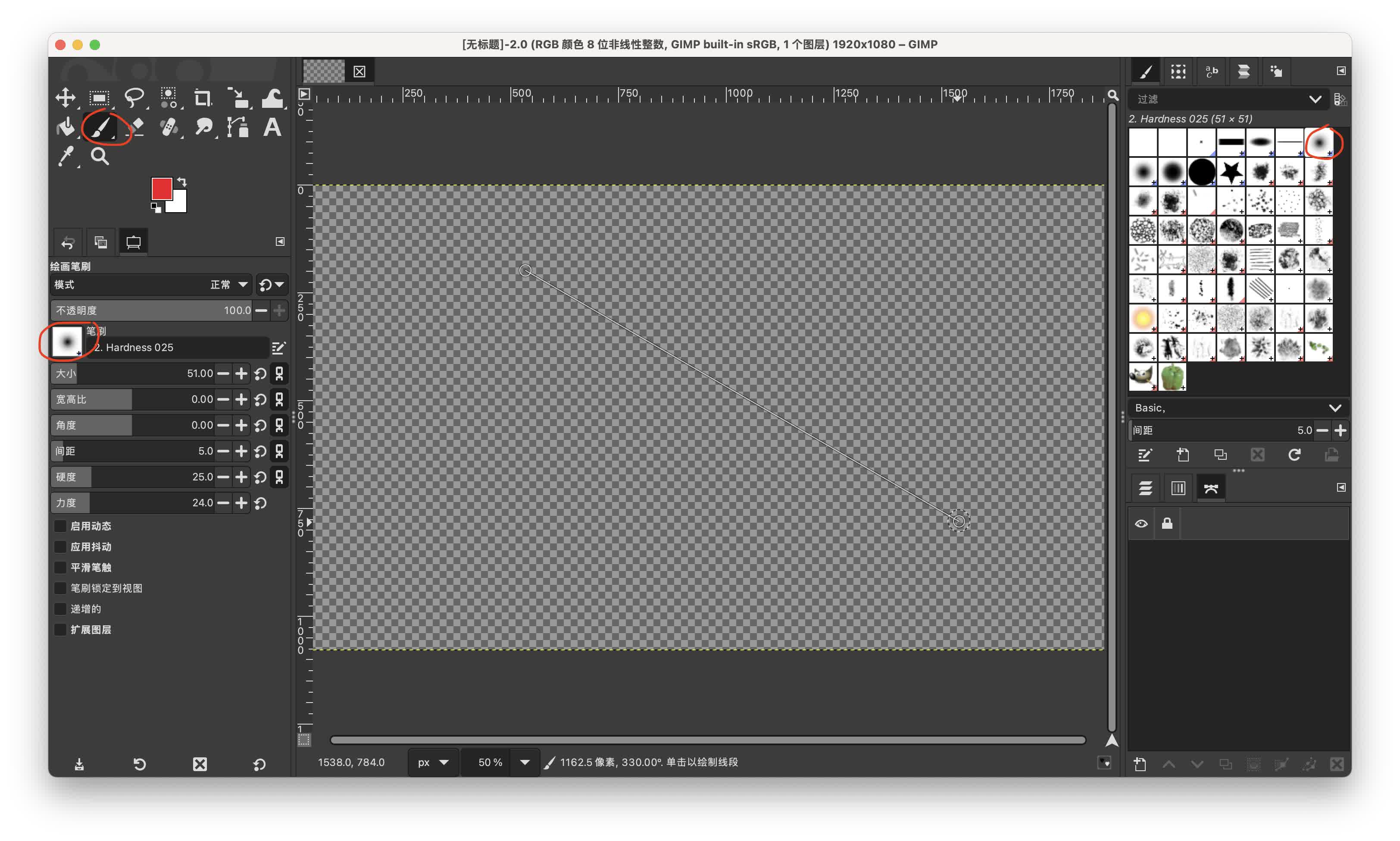

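The propagation speed of the spring's deformation mentioned above is, in essence, the speed of a longitudinal wave, so the problem reduces to finding that wave speed. The sound we hear is also a longitudinal wave; a longitudinal wave in a spring and sound are kinematically the same, only the medium differs. Here is an experiment on longitudinal waves in a spring.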

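Fortunately, this was worked out long ago. The derivation is fairly involved, so look it up if you are interested; here is the formula directly:

$$
v = \sqrt{\frac{E}{\rho}}
$$

where $E$ is the Young's modulus and $\rho$ is the density of the spring.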

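This makes sense: the speed of a longitudinal wave in a spring is set by the spring's stiffness and density. The softer the spring, the smaller its Young's modulus $E$; the greater its density, the smaller the wave speed. That is why these experiments always use very soft springs. Experiment 1 has weights at both ends and experiment 2 uses an even softer spring, both of which lower the wave speed $v$. The bottom stays at rest until the wave reaches it, so the smaller $v$ is, the longer the bottom stays still and the more striking the effect.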
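To make the scaling concrete, here is a small sketch that assumes the formula referred to above is the standard thin-rod relation $v = \sqrt{E/\rho}$ and that the bottom stays at rest for about $t = L / v$, where $L$ is the spring's length. The function names and the numbers are hypothetical; the values are effective quantities picked only to show the trend, not measurements of a real spring.

```python
import math

def wave_speed(youngs_modulus, density):
    """Longitudinal wave speed v = sqrt(E / rho) (m/s for SI inputs)."""
    return math.sqrt(youngs_modulus / density)

def rest_time(length, youngs_modulus, density):
    """Time for the wave to travel the spring's length: t = L / v."""
    return length / wave_speed(youngs_modulus, density)

# Hypothetical effective values, chosen only to show the scaling.
stiff = rest_time(length=1.0, youngs_modulus=4.0, density=1.0)
soft = rest_time(length=1.0, youngs_modulus=1.0, density=1.0)
print(soft / stiff)  # → 2.0: a 4x softer spring keeps the bottom at rest 2x longer
```

Because the rest time goes as the square root of $\rho / E$, making the spring four times softer only doubles the window, which is one reason a longer spring also helps.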